Contributed by Rishav Dutta, Machine Learning Engineer at Sofy

Automatic test case generation is changing how mobile apps are tested. It is the process of identifying and creating test cases for an application without human intervention, covering both visual and functional testing. For example, a test case generator can take a design document as input and produce test cases that validate the components it describes, or convert written test cases into the corresponding automated steps.

By learning from successive iterations and builds of an app, an automatic test case generator can keep tests up to date without user input. It can also suggest test cases based on related apps, helping maintain consistent quality standards across applications.

Challenges in Automatic Test Case Generation

Generating test cases automatically is complex. A solution must be intelligent enough to adapt its test cases to many different applications and functions. Current techniques include using machine learning to learn app pathways, automated code analysis to create test cases, and tracking user pathways to identify tasks. Each approach has limitations: they require extensive data analysis, and they must adapt to apps that differ widely from one another.

Automatic test cases can be either rigid (unit tests that must pass before each release) or fluid (tests that tell developers about users’ actual experiences). A test case generator therefore needs to be sophisticated enough to produce both kinds and meet the requirements of any development team.

Although the field is still in its infancy, research shows promise. For example, Natural Language Processing (NLP) methods can convert structured sentences into test cases, using language analysis to extract the individual steps. This produces test steps that are both flexible and programmatically understandable (Wang et al. 2020).

Let’s look at an example of how this works:

Suppose you want to open an app and then select the first item from a list. This sentence can be converted into the commands app.open() and list.select(item). Because the steps are extracted from natural language, you can write test cases as plain sentences, and the approach tolerates different writing styles.
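To make the sentence-to-command idea concrete, here is a minimal rule-based sketch. It is not the NLP method from Wang et al. or Sofy’s implementation: it simply splits a sentence into clauses and maps each clause to a command string with hand-written regular expressions. The `ORDINALS` table, the `RULES` patterns, and the `to_commands` function are hypothetical, and the output strings only mirror the app.open() and list.select(item) example, with the item given as a zero-based index.

```python
import re

# Hypothetical vocabulary: ordinal words mapped to zero-based list indices.
ORDINALS = {"first": 0, "second": 1, "third": 2, "last": -1}

# Hypothetical rules: each regex must match a whole normalized clause.
RULES = [
    (re.compile(r"open (?:the )?app"), lambda m: "app.open()"),
    (
        re.compile(r"select the (first|second|third|last) item from (?:a|the) list"),
        lambda m: f"list.select({ORDINALS[m.group(1)]})",
    ),
]

# Clause separators: "and then", ", then", "then", or "and".
SEPARATOR = re.compile(r",?\s+(?:and\s+)?then\s+|,?\s+and\s+")


def to_commands(sentence: str) -> list[str]:
    """Convert one structured English test step into command strings."""
    text = sentence.strip().rstrip(".").lower()
    commands = []
    for clause in SEPARATOR.split(text):
        for pattern, build in RULES:
            match = pattern.fullmatch(clause.strip())
            if match:
                commands.append(build(match))
                break
        else:
            # No rule matched: report the clause instead of guessing.
            raise ValueError(f"Unrecognized step: {clause!r}")
    return commands


print(to_commands("Open the app and then select the first item from the list."))
# prints ['app.open()', 'list.select(0)']
```

A real NLP pipeline would parse sentences rather than rely on fixed patterns, which is exactly why it copes better with varied writing styles; the regex version only shows the shape of the input and output.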

However, the technology is still developing, and it needs more robust techniques before it can handle dynamic app environments effectively.

Sofy’s Approach to Automatic Test Case Generation

Sofy leverages machine learning and AI to generate context-based pathways in applications. By understanding common flows, such as adding items to a cart in retail apps, Sofy can apply these patterns across different applications with minor adjustments for visual context clues.

Sofy’s process involves:

- Contextual Pathways: Generating flows based on user actions, such as searching for a product, viewing its details, adding it to the cart, and verifying the addition.

- Context Clues: Identifying variations in visual elements (e.g., “add to cart” buttons) and adapting test cases accordingly.

Additionally, Sofy tracks how users move through an app and identifies the most popular pathways. It then creates automatic test cases to ensure these critical flows remain stable across builds. This proactive approach helps maintain a consistent user experience even as the app evolves.
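A basic way to surface popular pathways is to count how often the same sequence of screens appears across user sessions. The sketch below assumes session logs are lists of screen names in visit order (a hypothetical format) and counts fixed-length contiguous sub-paths; `popular_pathways` and its parameters are illustrative, not how Sofy mines its analytics.

```python
from collections import Counter


def popular_pathways(sessions, length=3, top_k=2):
    """Return the most common contiguous screen sequences across user sessions.

    Each session is assumed to be a list of screen names in visit order.
    """
    counts = Counter()
    for screens in sessions:
        # Count each distinct sub-path once per session, so a single user
        # looping through the same screens cannot dominate the ranking.
        subpaths = {
            tuple(screens[i : i + length]) for i in range(len(screens) - length + 1)
        }
        counts.update(subpaths)
    return counts.most_common(top_k)


sessions = [
    ["home", "search", "product", "cart"],
    ["home", "search", "product", "cart", "checkout"],
    ["home", "deals", "product", "cart"],
    ["home", "search", "product"],
]
for path, users in popular_pathways(sessions):
    print(" -> ".join(path), f"({users} sessions)")
# prints:
# home -> search -> product (3 sessions)
# search -> product -> cart (2 sessions)
```

The top-ranked sub-paths are natural candidates for regression tests, since a break there would affect the most sessions.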

Examples of How Sofy Approaches Automatic Test Case Generation

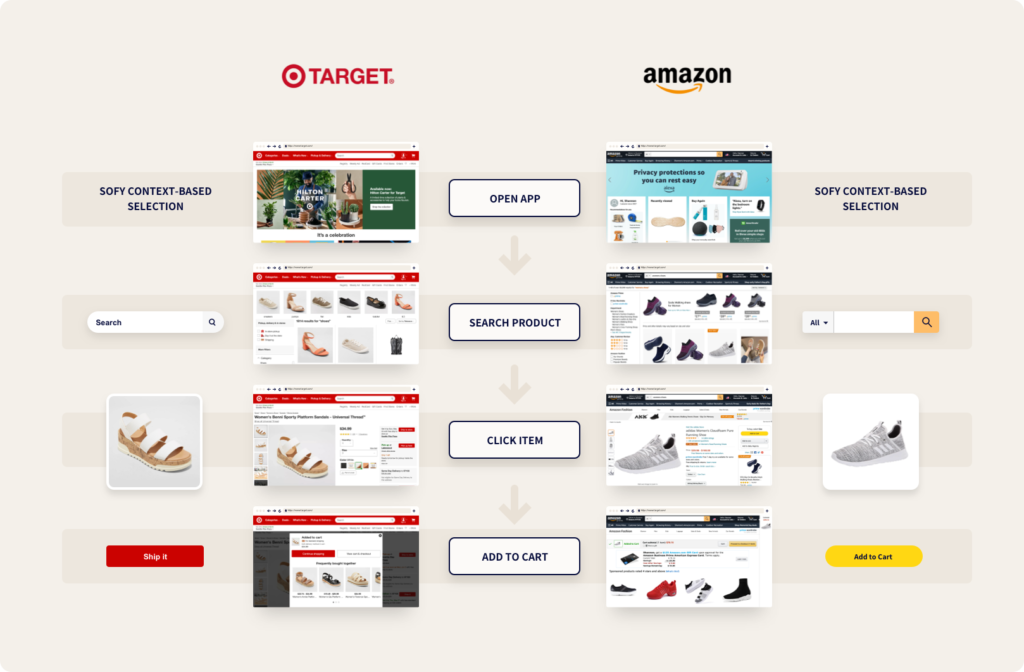

Example 1: Add to Cart

Adding an item to a cart works much the same way across retail apps. In most of them, you follow these steps:

- Search for a product.

- Open the product page.

- Add the product to the cart.

- Verify that the item was added to the cart.

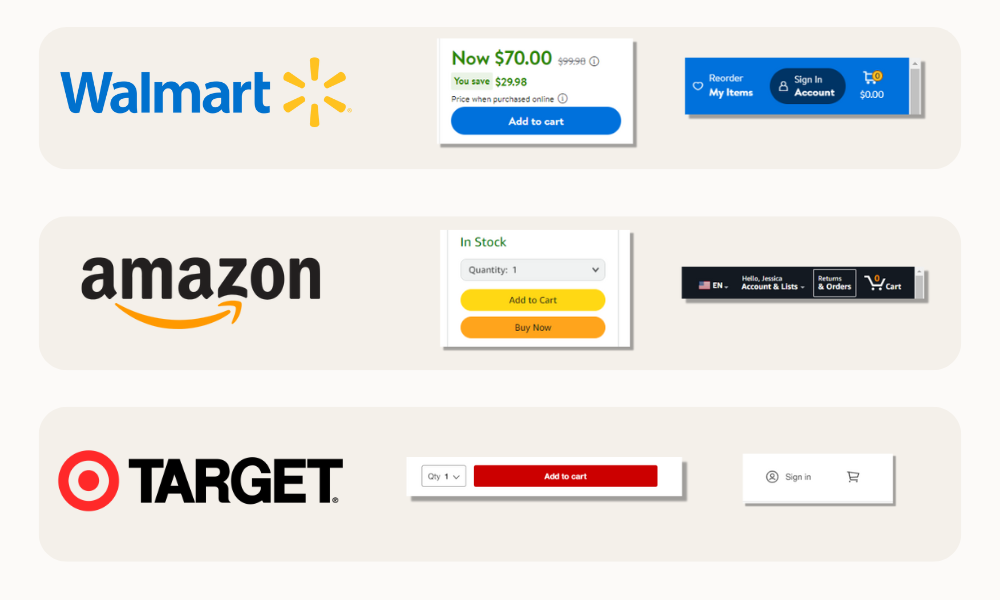

This flow can be applied to the apps of major US retailers like Amazon, Target, and Walmart. The differences lie in slight variations in visual context clues.
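One way to picture this reuse is to describe the flow once as a sequence of intents and bind each intent to app-specific labels. The sketch below is a minimal illustration, not Sofy’s implementation: `ADD_TO_CART_FLOW`, `AppProfile`, `instantiate`, the intent names, and both example apps are hypothetical, and the “Add to Cart” and “Ship It” labels are borrowed from this article’s Amazon and Target example.

```python
from dataclasses import dataclass
from typing import Dict, List

# The abstract "add to cart" pathway: each step names an intent, not a UI element.
ADD_TO_CART_FLOW = ["search", "open_product", "add_to_cart", "verify_cart"]


@dataclass
class AppProfile:
    """Hypothetical per-app bindings from intents to on-screen labels."""

    name: str
    labels: Dict[str, str]


def instantiate(flow: List[str], app: AppProfile, query: str) -> List[str]:
    """Turn the abstract flow into concrete, app-specific steps for one product."""
    steps = []
    for intent in flow:
        label = app.labels[intent]  # raises KeyError if the app has no binding
        if intent == "search":
            steps.append(f"type {query!r} into {label!r}")
        elif intent == "verify_cart":
            steps.append(f"assert {query!r} appears in {label!r}")
        else:
            steps.append(f"tap {label!r}")
    return steps


# Two hypothetical apps that differ only in their add-to-cart label.
app_a = AppProfile("App A", {"search": "Search", "open_product": "first result",
                             "add_to_cart": "Add to Cart", "verify_cart": "Cart"})
app_b = AppProfile("App B", {**app_a.labels, "add_to_cart": "Ship It"})

for app in (app_a, app_b):
    print(app.name, instantiate(ADD_TO_CART_FLOW, app, "headphones"))
```

Keeping the flow separate from the labels means only the bindings change from app to app; the hard part, which the next paragraphs address, is discovering those bindings without a human writing them.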

These differences are often minor, and by using machine learning, Sofy can identify elements that follow the same basic pattern. The Target app might have a red “Ship It” button while Amazon has an “Add to Cart” button, but the context is the same: the user is trying to add the item to a cart.

By identifying context clues for the “Add to Cart” button (using similar text or a cart icon), Sofy can infer and create the test case without requiring extra user input.
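Sofy uses machine learning for this step. As a much simpler stand-in, the sketch below scores on-screen elements with hand-written text and icon clues and picks the most likely “add to cart” control. The `Element` type, the `CART_PHRASES` and `CART_ICONS` lists, and the scoring weights are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional

# Hypothetical clue lists for the "add to cart" intent.
CART_PHRASES = ("add to cart", "add to bag", "add to basket", "ship it")
CART_ICONS = {"cart", "shopping_cart", "bag"}


@dataclass
class Element:
    """Hypothetical UI element: its visible text plus an optional icon name."""

    text: str
    icon: Optional[str] = None


def cart_score(element: Element) -> int:
    """Count the context clues suggesting this element adds an item to a cart."""
    text = element.text.lower()
    score = 0
    if any(phrase in text for phrase in CART_PHRASES):
        score += 2  # a known phrase is weighted as the strongest clue
    elif "cart" in text:
        score += 1  # weaker clue: the word "cart" alone
    if element.icon in CART_ICONS:
        score += 1
    return score


def find_add_to_cart(elements: List[Element]) -> Optional[Element]:
    """Return the highest-scoring element, or None when no clue matches."""
    best = max(elements, key=cart_score, default=None)
    if best is None or cart_score(best) == 0:
        return None
    return best


screen = [Element("Search", icon="magnifier"), Element("Ship It"), Element("Share")]
print(find_add_to_cart(screen))
# prints Element(text='Ship It', icon=None)
```

A learned model replaces the fixed phrase and icon lists with features it discovers from many apps, which is what lets it recognize labels nobody anticipated.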

Example 2: Changes to User Pathways

If users follow a certain pathway through an app, Sofy can track how that path changes between builds and verify that the changes do not significantly affect the user experience. Sofy treats these new pathways as test cases, automatically validates that they pass, and reports them as “user experience tests.” These reports show how many users would be affected by a specific build or change.
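The “user experience test” idea can be sketched in three steps: group users by the pathway they followed, replay each distinct pathway once on the new build, and add up the users behind every failing pathway. In this minimal sketch, `user_experience_tests`, the `user_paths` format, and the `replay` callback are hypothetical; `replay` stands in for whatever actually drives the app on a device.

```python
from collections import Counter
from typing import Callable, Dict, Tuple

Path = Tuple[str, ...]  # a pathway: screen names in visit order


def user_experience_tests(
    user_paths: Dict[str, Path], replay: Callable[[Path], bool]
) -> dict:
    """Replay each distinct user pathway on a new build and count impacted users.

    user_paths maps a user id to the pathway that user followed on the previous
    build (hypothetical format). replay is a hypothetical callback that drives
    the new build and returns True if the pathway still completes.
    """
    users_per_path = Counter(user_paths.values())
    # One test per distinct pathway, not per user.
    passed = {path: replay(path) for path in users_per_path}
    failing = {path: n for path, n in users_per_path.items() if not passed[path]}
    return {
        "tests_run": len(passed),
        "tests_failed": len(failing),
        "impacted_users": sum(failing.values()),
        "failing_paths": failing,
    }


user_paths = {
    "u1": ("home", "search", "product", "cart"),
    "u2": ("home", "search", "product", "cart"),
    "u3": ("home", "deals", "product", "cart"),
}


# Stand-in for a real replay: pretend the new build removed the "deals" screen.
def fake_replay(path: Path) -> bool:
    return "deals" not in path


print(user_experience_tests(user_paths, fake_replay))
```

Deduplicating pathways before replaying keeps the number of device runs proportional to distinct flows rather than to users, while the per-path counts still give the impact figure.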

Because it creates these test cases automatically, Sofy can identify the most important parts of an app and quickly report how each new build affects them.

Benefits of Using Sofy for Mobile App Testing

Sofy offers several advantages through its AI-driven testing platform:

- Scriptless User-Friendly Interface: Sofy provides a user-friendly interface that takes the guesswork out of complex automated testing and analytics. Anyone can test your app using Sofy’s platform; no coding or development knowledge is required.

- From Manual to Automated Tests: Sofy Co-Pilot can convert manual tests written in plain English into automated test cases without the need for AI training or expertise.

- Real Devices on the Cloud: Sofy offers a cloud-based testing environment with access to over 100 different Android and iOS devices. This allows for comprehensive testing across various device configurations without the need for physical hardware.

- Integrate with your CI/CD Pipeline: Connect your Sofy account to your CI/CD pipeline and to other tools you already use, such as Jira and Datadog, reducing the need to switch between multiple platforms.

By automating test case generation, Sofy acts as a testing assistant that highlights the critical parts of an application and keeps users informed about each build. Its integrations with DevOps tools help ensure builds ship without regressions and that reports reach the right places, speeding up release and testing.

Want to see Sofy’s AI-powered testing platform in action? Get a demo today!