Ensuring the reliability and performance of mobile apps across various devices and operating systems presents significant challenges. Traditional testing methods, while effective, often struggle to keep pace with rapid development cycles and the diverse ecosystem of mobile devices. This is where artificial intelligence (AI) steps in, revolutionizing mobile app testing.

AI-powered testing automates repetitive tasks and brings intelligence to the testing process. By leveraging machine learning algorithms, AI can detect patterns, predict potential issues, and adapt to app environment changes, resulting in more efficient, accurate, and scalable testing processes.

In this blog, we’ll walk through how to train AI to test mobile apps more effectively: collecting and preparing data, choosing algorithms, training and evaluating the model, and keeping it learning so that it identifies app issues accurately.

1. Collecting and Preparing Data

Data Collection

To properly train AI models, it’s important to collect both historical and user interaction data, which can include:

- Historical test cases

- Bug reports

- Performance logs

- User clickstreams

- App navigation paths

- Error logs

Data Preparation

Clean and label the data before training. Remove unnecessary or inconsistent records so that the AI model is trained only on high-quality information, and label the data clearly by bug type, performance issue, and test outcome.
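As a rough illustration of the cleaning step, the sketch below drops duplicate, empty, and unlabeled records and normalizes label spelling. The record fields (`id`, `message`, `label`) and the label set are assumptions made up for this example, not a required schema.

```python
from collections import Counter

# Hypothetical raw records; field names are assumptions for illustration.
RAW_RECORDS = [
    {"id": 1, "message": "App crashed on login", "label": "Crash "},
    {"id": 2, "message": "Slow scroll on feed", "label": "performance"},
    {"id": 2, "message": "Slow scroll on feed", "label": "performance"},  # duplicate
    {"id": 3, "message": "", "label": "crash"},                           # empty message
    {"id": 4, "message": "Button overlaps text", "label": "UI"},
    {"id": 5, "message": "Checkout passed", "label": None},               # unlabeled
]

ALLOWED_LABELS = {"crash", "performance", "ui", "pass"}


def clean_records(records):
    """Drop duplicates, incomplete rows, and rows with unknown labels."""
    seen_ids = set()
    cleaned = []
    for rec in records:
        label = (rec.get("label") or "").strip().lower()
        message = (rec.get("message") or "").strip()
        if rec["id"] in seen_ids or not message or label not in ALLOWED_LABELS:
            continue
        seen_ids.add(rec["id"])
        cleaned.append({"id": rec["id"], "message": message, "label": label})
    return cleaned


cleaned = clean_records(RAW_RECORDS)
label_counts = Counter(rec["label"] for rec in cleaned)
```

Checking `label_counts` after cleaning is a quick way to spot classes that are badly under-represented before any training starts.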

2. Choosing the Right Algorithms

Machine Learning (ML) Models

In machine learning, algorithms can be broadly classified into two categories: supervised learning and unsupervised learning. Both methods have unique approaches and applications for training the AI.

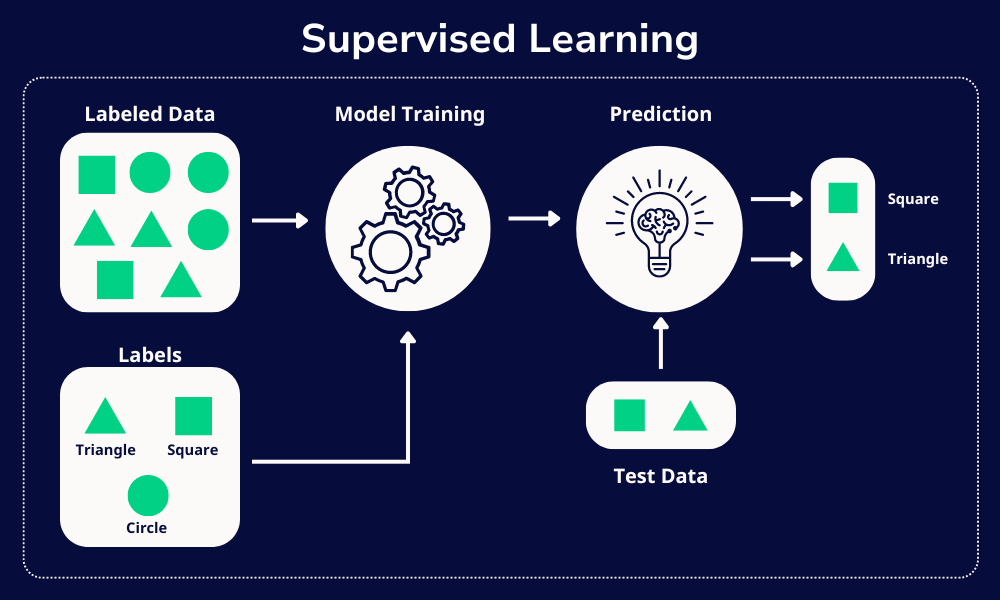

Supervised Learning

Supervised learning involves training the model on labeled data. The algorithm learns an input-to-output mapping, which can then be used to predict labels for new data. For example, supervised learning can be used to detect spam messages in messaging apps by classifying messages based on word frequency, message length, and sender behavior.
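To make the idea concrete in a testing context, here is a minimal sketch of a supervised classifier written in plain Python. It uses a nearest-centroid rule, chosen only because it is short; the features (average response time and error count) and the sample values are hypothetical.

```python
import math
from collections import defaultdict

# Hypothetical labeled test runs: (avg_response_seconds, errors_logged) -> outcome.
TRAINING_RUNS = [
    ((0.2, 0), "pass"),
    ((0.3, 1), "pass"),
    ((0.4, 0), "pass"),
    ((2.5, 6), "fail"),
    ((3.0, 4), "fail"),
    ((2.0, 5), "fail"),
]


def fit_centroids(samples):
    """Learn one mean feature vector (centroid) per label."""
    grouped = defaultdict(list)
    for features, label in samples:
        grouped[label].append(features)
    return {
        label: tuple(sum(col) / len(col) for col in zip(*rows))
        for label, rows in grouped.items()
    }


def predict(centroids, features):
    """Return the label whose centroid is closest to the given features."""
    return min(centroids, key=lambda label: math.dist(centroids[label], features))


centroids = fit_centroids(TRAINING_RUNS)
prediction_fast = predict(centroids, (0.25, 0))  # resembles the passing runs
prediction_slow = predict(centroids, (2.8, 5))   # resembles the failing runs
```

In practice a library model would likely replace the hand-written rule, but the shape is the same: fit on labeled examples, then predict labels for unseen ones.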

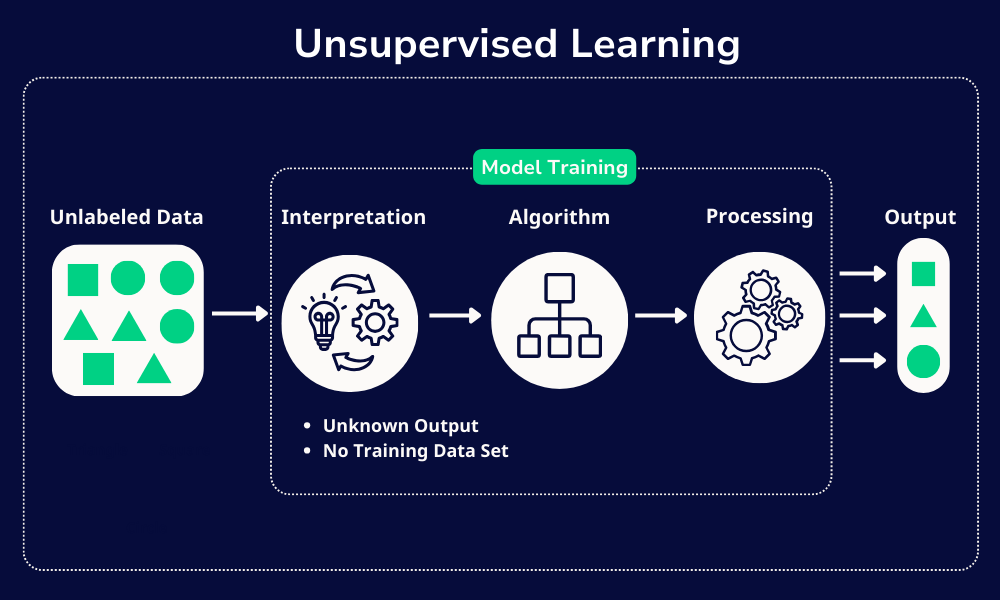

Unsupervised Learning

Unsupervised learning involves training a model on data without labeled responses. The goal is to identify patterns or structures within the data without specific guidance. For example, e-commerce apps can use unsupervised learning to segment users based on shopping behavior, analyzing purchase history, browsing patterns, and cart additions to identify natural groupings of users.
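The grouping idea can be sketched with a small k-means implementation in plain Python. The session features (screens visited, taps per minute) and the choice of two clusters are assumptions for illustration; no labels are supplied, and the algorithm finds the groups on its own.

```python
import math

# Hypothetical per-session features: (screens_visited, taps_per_minute).
SESSIONS = [(2, 5), (3, 6), (2, 7), (20, 40), (22, 38), (21, 42)]


def kmeans(points, initial_centroids, iterations=10):
    """Plain k-means: assign points to the nearest centroid, then recompute centroids."""
    centroids = list(initial_centroids)
    assignments = []
    for _ in range(iterations):
        assignments = [
            min(range(len(centroids)), key=lambda i: math.dist(p, centroids[i]))
            for p in points
        ]
        for i in range(len(centroids)):
            members = [p for p, a in zip(points, assignments) if a == i]
            if members:  # keep the old centroid if a cluster ends up empty
                centroids[i] = tuple(sum(c) / len(c) for c in zip(*members))
    return assignments, centroids


# Seeding with the first and last sessions keeps the run deterministic.
assignments, centroids = kmeans(SESSIONS, [SESSIONS[0], SESSIONS[-1]])
```

Here the light-usage and heavy-usage sessions end up in separate clusters, which a tester could then inspect to decide which usage patterns deserve dedicated test coverage.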

Natural Language Processing (NLP)

NLP can significantly enhance the process of analyzing and generating bug reports in software development. By analyzing collected bug report data, NLP algorithms can generate detailed bug reports that include:

- Bug Summary: A concise summary of the bug, outlining the main issues.

- Steps to Reproduce: Detailed instructions to reproduce the bug, helping developers understand how the bug appears.

- Environment Details: Information about the environment where the bug was encountered, such as device type, operating system, app version, and specific configurations.

- Technical Details: Specific technical information such as error codes, stack traces, and relevant code snippets.
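The four sections above map naturally onto a small data structure. The sketch below fills it from a free-text tester note using regular expressions, which is a far simpler rule-based stand-in for a real NLP model; the note format, the `E` plus four digits error-code pattern, and the `BugReport` class are all hypothetical.

```python
import re
from dataclasses import dataclass, field


@dataclass
class BugReport:
    summary: str
    steps_to_reproduce: list = field(default_factory=list)
    environment: dict = field(default_factory=dict)
    error_codes: list = field(default_factory=list)


# Hypothetical free-text note from a tester.
RAW_NOTE = """Checkout button does nothing after adding a coupon.
1. Open the cart
2. Apply coupon SAVE10
3. Tap Checkout
Device: Pixel 7, OS: Android 14, App: 3.2.1
Logcat shows E1042 and E2001 right after the tap."""


def parse_note(note):
    """Pull the four report sections out of a loosely formatted note."""
    lines = [line.strip() for line in note.splitlines() if line.strip()]
    # Numbered lines become reproduction steps, with the numbering stripped.
    steps = [
        re.sub(r"^\d+\.\s*", "", line)
        for line in lines
        if re.match(r"^\d+\.\s", line)
    ]
    # "Key: value" pairs become environment details.
    environment = dict(re.findall(r"(Device|OS|App):\s*([^,\n]+)", note))
    # Assumed error-code format: capital E followed by four digits.
    error_codes = re.findall(r"\bE\d{4}\b", note)
    return BugReport(lines[0], steps, environment, error_codes)


report = parse_note(RAW_NOTE)
```

A trained language model would handle far messier input than this, but the output contract (summary, steps, environment, technical details) can stay the same.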

3. Training and Testing the Model

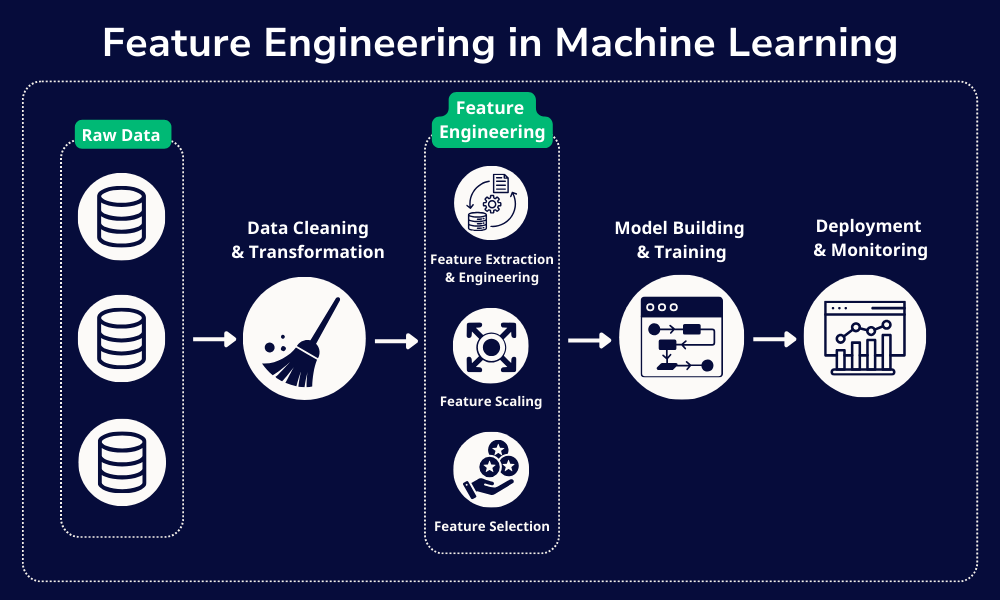

Feature Engineering

Feature engineering is crucial for building effective AI models for detecting bugs and performance issues. This process involves selecting, modifying, and creating new features (also called variables or dimensions) from raw data to enhance the model’s ability to make accurate predictions.
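As a small example of what this can look like, the sketch below turns raw log events from one test session into numeric features. The event fields and the 2000 ms "slow screen" threshold are assumptions chosen for illustration.

```python
from statistics import mean

# Hypothetical raw log events for one test session; field names are assumptions.
SESSION_EVENTS = [
    {"screen": "login", "load_ms": 420, "level": "info"},
    {"screen": "home", "load_ms": 910, "level": "warning"},
    {"screen": "cart", "load_ms": 2300, "level": "error"},
    {"screen": "home", "load_ms": 650, "level": "info"},
]

SLOW_LOAD_MS = 2000  # assumed threshold for a "slow" screen


def build_features(events):
    """Turn raw events into numeric features a model can consume."""
    loads = [e["load_ms"] for e in events]
    errors = sum(1 for e in events if e["level"] == "error")
    return {
        "event_count": len(events),
        "unique_screens": len({e["screen"] for e in events}),
        "mean_load_ms": mean(loads),
        "max_load_ms": max(loads),
        "error_rate": errors / len(events),
        "has_slow_screen": int(max(loads) > SLOW_LOAD_MS),
    }


features = build_features(SESSION_EVENTS)
```

Derived values such as `error_rate` or `has_slow_screen` often carry more signal for a bug-detection model than the raw events they were computed from.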

Model Training

Train the AI model using a portion of the prepared data, adjusting parameters to optimize performance. This includes:

- Data Splitting: Split data into training and testing sets (typically 70-80% for training and 20-30% for testing).

- Hyperparameter Tuning: Adjust hyperparameters to optimize model performance.

- Cross-Validation: Use cross-validation to assess how well the model generalizes to new data.
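The three steps above can be sketched end to end in plain Python. This example assumes a synthetic two-class dataset, a 75/25 split, a hand-written k-nearest-neighbors classifier, and `k` as the only hyperparameter; all of these are illustrative choices rather than recommendations.

```python
import math
import random
from collections import Counter


def make_dataset(n=40, seed=0):
    """Synthetic data: label 0 (pass) clusters near 1.0, label 1 (fail) near 4.0."""
    rng = random.Random(seed)
    data = []
    for i in range(n):
        label = i % 2
        center = 1.0 if label == 0 else 4.0
        data.append(((rng.gauss(center, 0.5), rng.gauss(center, 0.5)), label))
    return data


def train_test_split(data, test_fraction=0.25, seed=0):
    """Shuffle a copy of the data and cut it into training and testing sets."""
    shuffled = data[:]
    random.Random(seed).shuffle(shuffled)
    cut = int(len(shuffled) * (1 - test_fraction))
    return shuffled[:cut], shuffled[cut:]


def knn_predict(train, point, k):
    """Majority label among the k training samples closest to the point."""
    nearest = sorted(train, key=lambda s: math.dist(s[0], point))[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]


def accuracy(train, test, k):
    hits = sum(knn_predict(train, x, k) == y for x, y in test)
    return hits / len(test)


def cross_val_score(data, k, folds=5):
    """Average accuracy over `folds` rounds, each holding out a different slice."""
    scores = []
    for f in range(folds):
        held_out = data[f::folds]
        kept = [s for i, s in enumerate(data) if i % folds != f]
        scores.append(accuracy(kept, held_out, k))
    return sum(scores) / folds


dataset = make_dataset()
train_set, test_set = train_test_split(dataset)

# Hyperparameter tuning: pick k by cross-validation on the training set only.
cv_scores = {k: cross_val_score(train_set, k) for k in (1, 3, 5)}
best_k = max(cv_scores, key=cv_scores.get)

# The test set is touched once, at the end, to estimate generalization.
test_accuracy = accuracy(train_set, test_set, best_k)
```

Keeping the test set out of the tuning loop is the important design choice here: if it influenced the choice of `k`, the final accuracy would be an optimistic estimate.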

Evaluating the Model’s Performance

To ensure the model performs as intended, evaluate its performance, focusing on issues like overfitting and underfitting. Methods include:

- Validation Set: Use a separate validation set during training.

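- Concept Drift: Evaluate performance metrics over time to catch cases where the app or its usage has changed and the model’s predictions have become less accurate.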

- Real-Time Performance Monitoring: Continuously track model predictions and performance metrics.
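One simple way to watch for drift is a rolling accuracy window compared against a baseline. The sketch below assumes a baseline accuracy of 0.9, a window of five predictions, and a tolerance of 0.15; the `AccuracyMonitor` class and these numbers are hypothetical and would need tuning for a real project.

```python
from collections import deque


class AccuracyMonitor:
    """Track rolling accuracy and flag a drop below a baseline."""

    def __init__(self, baseline, window=5, tolerance=0.15):
        self.baseline = baseline
        self.tolerance = tolerance
        self.outcomes = deque(maxlen=window)  # oldest results fall off automatically

    def record(self, predicted, actual):
        self.outcomes.append(predicted == actual)

    def rolling_accuracy(self):
        return sum(self.outcomes) / len(self.outcomes) if self.outcomes else None

    def drift_suspected(self):
        # Only judge once the window is full, to avoid noisy early alerts.
        if len(self.outcomes) < self.outcomes.maxlen:
            return False
        return self.rolling_accuracy() < self.baseline - self.tolerance


monitor = AccuracyMonitor(baseline=0.9)

# Simulated stream: five correct predictions, then three misses in a row.
stream = [("fail", "fail")] * 5 + [("pass", "fail")] * 3
alerts = []
for predicted, actual in stream:
    monitor.record(predicted, actual)
    alerts.append(monitor.drift_suspected())
```

A single miss does not trigger the alert in this setup; the flag is raised only once the rolling accuracy falls below the baseline minus the tolerance.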

4. Implement Continuous Learning

Feedback Loop

A feedback loop involves continuously collecting and integrating feedback from the model’s performance and user interactions. This process includes:

- Continuous Feedback: Feed new test results and user interactions back into the model.

- Real-Time Monitoring: Track key metrics like prediction accuracy and error rates.

- User Reports: Collect and incorporate user reports on bugs and performance issues.

Active Learning

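Active learning helps build robust models with less labeled data by having the model flag the examples it is least certain about, so that human reviewers label only those. This approach includes: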

- Iterative Improvement: Continuously refine the model by incorporating new data and feedback.

- User Feedback: Use feedback from QA testers and developers to refine the AI model.

- Performance Metrics: Track key performance metrics to evaluate and improve the AI’s effectiveness.
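A common active learning strategy is uncertainty sampling: send the examples the model is least sure about to a human for labeling. The sketch below assumes the model outputs a bug probability per unlabeled test run; the run IDs, probabilities, and labeling budget are made up for illustration.

```python
# Hypothetical model outputs: probability that each unlabeled test run hides a bug.
UNLABELED_PREDICTIONS = {
    "run_101": 0.97,
    "run_102": 0.52,
    "run_103": 0.08,
    "run_104": 0.45,
    "run_105": 0.81,
}


def select_for_labeling(predictions, budget):
    """Uncertainty sampling: pick the runs whose probability is closest to 0.5."""
    ranked = sorted(predictions, key=lambda run_id: abs(predictions[run_id] - 0.5))
    return ranked[:budget]


# With a budget of two, QA reviews only the two most ambiguous runs.
to_review = select_for_labeling(UNLABELED_PREDICTIONS, budget=2)
```

Once testers label the selected runs, those examples go back into the training set and the model is retrained, which is where the feedback from QA and developers enters the loop.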

5. Using Advanced Techniques

Self-Healing Scripts

Self-healing scripts automatically adjust to changes in the application’s UI or functionality. When an element in the UI changes, these scripts detect and update the test script to maintain its accuracy without manual intervention.
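The core mechanism can be sketched without any testing framework: keep several locators per element, fall back when the primary one fails, and update the stored script with what worked. The screen snapshot, the locator format, and the `find_element` helper below are hypothetical and do not represent the API of any particular tool.

```python
# Hypothetical snapshot of the current screen: one dict of attributes per element.
CURRENT_SCREEN = [
    {"id": "btn_submit_v2", "text": "Sign in", "type": "button"},
    {"id": "input_email", "text": "", "type": "text_field"},
]


class ElementNotFound(Exception):
    pass


def find_element(screen, locators):
    """Try each (attribute, value) locator in order; return the element and the locator that matched."""
    for attribute, value in locators:
        for element in screen:
            if element.get(attribute) == value:
                return element, (attribute, value)
    raise ElementNotFound(f"No locator matched: {locators}")


# The stored script still points at the old id, with the visible text as a fallback.
login_button_locators = [("id", "btn_submit"), ("text", "Sign in")]
stale_locator = login_button_locators[0]

element, matched = find_element(CURRENT_SCREEN, login_button_locators)
if matched != stale_locator:
    # "Heal" the script: replace the stale id with the element's current id.
    login_button_locators.remove(stale_locator)
    login_button_locators.insert(0, ("id", element["id"]))
```

Real self-healing tools typically weigh many more attributes and may use a model to score candidate matches, but the detect, fall back, and update cycle is the same.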

Predictive Analytics

Predictive analytics leverages statistical algorithms, machine learning techniques, and historical data to make predictions about future outcomes. This can help in training AI to detect and address potential issues before they impact the user experience.
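As a deliberately simple illustration, the sketch below ranks app modules by a smoothed historical failure rate so that the riskiest areas are tested first. The module names and counts are invented, and the add-one smoothing is just one reasonable choice for handling modules with very little history.

```python
# Hypothetical history: module -> (failed_runs, total_runs) from past test cycles.
HISTORY = {
    "checkout": (12, 40),
    "login": (2, 50),
    "search": (0, 3),
    "profile": (5, 20),
}


def failure_risk(failed, total, prior_failures=1, prior_runs=2):
    """Smoothed failure rate; the prior keeps tiny samples from reading as 0% or 100%."""
    return (failed + prior_failures) / (total + prior_runs)


risk_by_module = {
    module: failure_risk(failed, total)
    for module, (failed, total) in HISTORY.items()
}

# Highest predicted risk first: these modules get tested earliest.
priority = sorted(risk_by_module, key=risk_by_module.get, reverse=True)
```

Note that `search` has no recorded failures but only three runs, so smoothing ranks it above `login`, whose low failure rate is backed by far more history.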

How Sofy Uses AI for Easy Mobile App Testing

As we’ve discussed in this blog, AI is making great strides in simplifying and streamlining mobile app testing, but traditional AI training is still a complex and time-consuming process. Effective as it is, this approach can be daunting, especially for teams without specialized AI expertise.

Sofy offers a better solution by simplifying and streamlining the AI-driven mobile app testing process. Here’s how Sofy leverages AI to make mobile app testing easier and more efficient:

- Scriptless User-Friendly Interface: Sofy provides a user-friendly interface that takes the guesswork out of complex automated testing and analytics. Anyone can test your app using Sofy’s platform; no coding or development knowledge is required.

- From Manual to Automated Tests: Sofy Co-Pilot can convert manual tests written in plain English into automated test cases without the need for AI training or expertise.

- Real Devices on the Cloud: Sofy offers a cloud-based testing environment with access to over 100 different Android and iOS devices. This allows for comprehensive testing across various device configurations without the need for physical hardware.

- Integrate with your CI/CD Pipeline: Seamlessly connect your Sofy account to your CI/CD pipeline and to tools like Jira and Datadog, reducing the need to switch between multiple platforms.

Want to see Sofy’s AI-powered testing platform in action? Get a demo today!

Embracing AI for Superior Mobile App Testing

Integrating AI into mobile app testing transforms the process, making it more efficient and precise. By leveraging machine learning, predictive analytics, and self-healing scripts, developers can ensure their apps are robust, reliable, and user-friendly. Together, the steps involved (data collection and preparation, feature engineering, model training, continuous learning, and advanced techniques) create a testing framework that reduces manual effort, anticipates potential issues, and ensures high-quality user experiences.