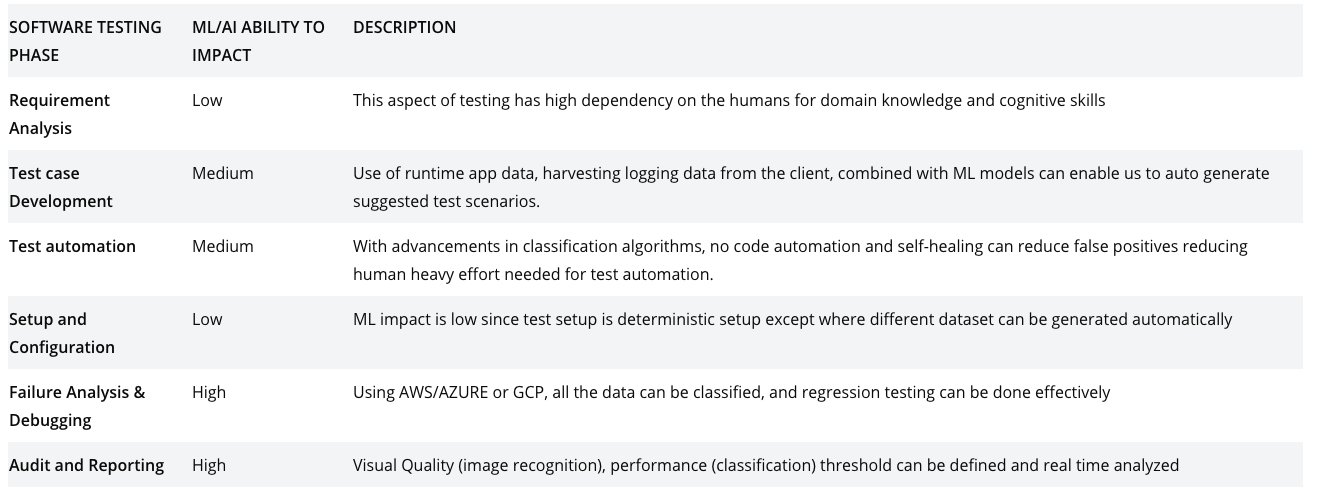

Lately, I have been getting a lot of questions from fellow QA managers and software engineers about how AI/Machine Learning can transform the traditional testing paradigm in the same way it has transformed other domains. First, it is important to understand the overall testing lifecycle. Figure 1 outlines the general test development lifecycle for a new or existing feature.

Figure 1: Test Development Lifecycle – Human-Centric Approach

Requirement Analysis: This phase is about understanding the requirements for a given application or feature. Developers, product managers, and QA engineers work together to understand the feature set, use cases, implementation, and integration with existing systems in order to come up with testing requirements.

Test Case Development: QA engineers review the user experience and create scenarios or test cases to validate the application or feature set. Developers also identify unit tests or functional tests, depending on the system architecture.

Test Automation: After test cases are created, developers and QA engineers determine how to automate them, how best to avoid regressions, and how to increase test coverage so that products ship with higher quality.

Setup, Configuration, Environments: Depending on the type of application, this step can be a significant exercise. During my time at Microsoft Dynamics 365, setting up test data that triggered different business logic was critical for effective testing. For a B2C application, the device matrix is critical. The QA engineering team needs to use the right data (for a given target market) and build the right validation matrix to drive the overall setup and configuration for validation.
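As a rough illustration of the validation matrix idea, here is a minimal Python sketch that combines devices, OS versions, and target markets into a list of configurations to test. The device names, OS versions, markets, and the `build_validation_matrix` helper are all hypothetical and chosen only for the example; a real matrix would come from your own usage data.

```python
from itertools import product

# Hypothetical inputs: device names, OS versions, and markets are illustrative only.
OS_VERSIONS_BY_DEVICE = {
    "Pixel 7": ["Android 13", "Android 14"],
    "Galaxy S23": ["Android 13"],
    "iPhone 14": ["iOS 16", "iOS 17"],
}
TARGET_MARKETS = ["US", "DE", "IN"]


def build_validation_matrix(os_versions_by_device, markets):
    """Return one configuration per (device, OS version, market) combination."""
    matrix = []
    for device, market in product(os_versions_by_device, markets):
        # Only pair a device with the OS versions it can actually run.
        for os_version in os_versions_by_device[device]:
            matrix.append(
                {"device": device, "os": os_version, "market": market}
            )
    return matrix


matrix = build_validation_matrix(OS_VERSIONS_BY_DEVICE, TARGET_MARKETS)
for config in matrix[:3]:
    print(config)
```

Each entry can then drive environment setup and test data selection for that market. In practice the full cross product grows quickly, so teams usually prune it to the combinations that matter most for their customers.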

Failure analysis and debugging: This is one of my favorite areas, and some of the best QA engineers I have worked with have a natural ability to keep asking “why” until they get their answer. Today, plenty of telemetry is available from built-in SDKs such as Firebase and from monitoring and diagnostics systems such as Datadog and AppDynamics, which helps teams identify issues faster.

Audit and Report: For most companies, product releases have become daily or weekly, so it is important to understand the changes across releases and their impact to ensure those changes are tested. The ability to test while the app is in production has enabled companies to use a combination of audit and failure analysis to identify issues before they impact customers.

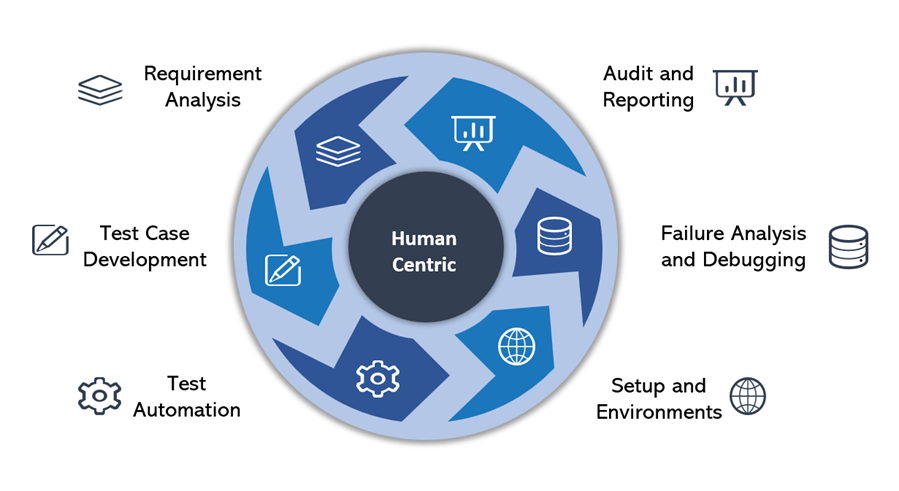

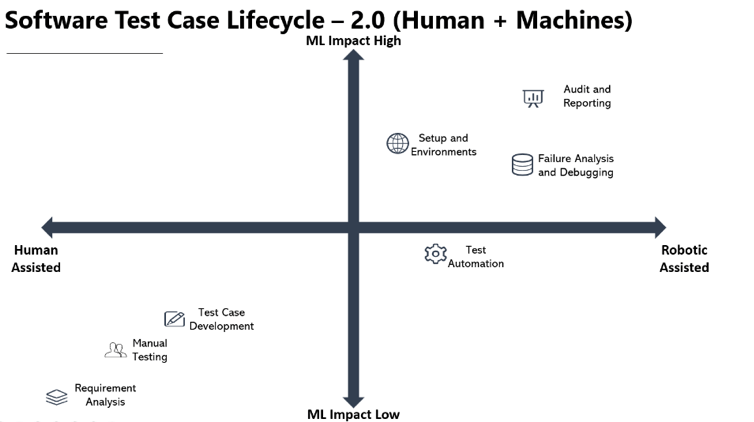

The challenge with the current methodology is that it is human-intensive and does not scale now that the pace of software releases has accelerated considerably. Ensuring high quality at this pace of change has become a bottleneck. One way to address these challenges is to teach a robot to perform many of the human-centric actions in the testing lifecycle. Advancements in ML and the availability of tools such as Azure ML Studio have made it possible to apply Machine Learning to the software testing lifecycle. Figures 2 and 3 show how, and to what degree, ML/AI can influence the various stages of the Software Testing Lifecycle.

Figure 2: How ML impacts Software Testing Lifecycle

Figure 3: ML/AI ability to impact Software Testing Lifecycle phases

We at SOFY believe that ML/AI must be used in the context of the Software Testing Lifecycle to help improve the productivity of the QA team and increase product quality. In the next series of blog posts, I will walk through each area above in detail and show how QA teams can leverage existing techniques to build an in-house ML/AI-powered solution.

You might also find these posts interesting: "What do Smart Monkeys, Chess, and ML Powered App Crawlers have in common?" and "Reinventing App Testing Powered by AI". Get a demo today to see just how AI can benefit your QA process.