A lot has changed in the field of software development over the past several decades. We have gone from writing code in machine language to sophisticated programming languages to low-code/no-code (LCNC) development, from waterfall to agile methodology, from silos to DevOps, from proprietary APIs to connected APIs, and so on. However, we have yet to see the same pace of transformation reach the world of software testing, which continues to rely heavily on humans, whether the testing is manual or automated. One might ask how automated testing relies on humans; after all, the goal of automation is to have machines perform the testing. The issue is that creating automation code is still a human-intensive effort. Needless to say, software testing remains human-centric, from test case creation and maintenance to diagnosis, reporting, and performance testing. In many scenarios, automation is not possible or economically viable, so automation levels have plateaued below 53% (according to Forrester), and manual testing continues to consume significant time and resources. Just look at the testing budget of any project: most of the spend goes to human labor and very little to tools that increase productivity. It is no surprise that this approach has made software testing a bottleneck to the rapid product releases that businesses demand.

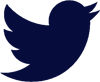

Here is the reality of software application development: the need for speed and the expectation of a bug-free app in the hands of finicky customers are at an all-time high, while the complexity of apps and the number of tools and frameworks used to build them continue to grow.

Here are some of the challenges of software testing:

- Test case development is very tedious, particularly when you consider the number of test cases needed to capture a multitude of scenarios and edge cases. Absent a robust method for test case generation, test coverage of the app will be low.

- Striking the right balance between manual and automated testing is an agonizing decision that every team and every project still must make. Put too much effort into automation too soon, and you end up with test scripts that constantly break and make little testing progress until the scripts are finalized.

- How much testing to perform on real devices vs. emulators and simulators is another. How many app issues can be detected only on real devices rather than on emulators? How much do you spend on buying devices for your testers vs. relying on emulators and simulators? With most people working remotely, the problem is more acute.

- How much non-functional testing should be performed during a release, and how often? Non-functional testing, being non-deterministic in nature, takes longer than functional testing and is hard to automate.

- Root cause analysis of a bug requires cognitive skill and the correlation of multiple data points, making it a highly skilled effort. How do you leverage historical data or bring community insights to bear on your specific app?

The question, then, is whether AI and ML can help address some of the hard problems in software testing.

A quick intro to machine learning

Machine learning (ML) is best described as a computer program that learns to perform a task without being explicitly programmed to perform it. It does so by learning to recognize patterns in data and using them to trigger actions: ML algorithms learn from data and then apply what they have learned to make informed decisions. The three commonly used approaches to ML are supervised learning (which learns from pre-labeled datasets), unsupervised learning (in which models extract interesting patterns from unlabeled data) and reinforcement learning (in which learning is driven by a system of rewards).
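To make supervised learning concrete in a testing context, here is a minimal sketch, assuming a tiny hypothetical set of test-failure messages that a team has already labeled by cause (the labels `flaky_wait`, `product_bug` and `environment`, and the messages themselves, are made up for illustration). It uses a 1-nearest-neighbor classifier over bag-of-words vectors, one of the simplest supervised learners; a real tool would need far more data and a richer model.

```python
import math
import re
from collections import Counter
from typing import List, Optional, Tuple


def tokenize(text: str) -> Counter:
    """Lowercase word counts: a crude bag-of-words feature vector."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


# Hypothetical pre-labeled training data: (failure message, label).
TRAINING: List[Tuple[str, str]] = [
    ("Timeout waiting for element #login-button", "flaky_wait"),
    ("Element not found: #submit after page load timeout", "flaky_wait"),
    ("AssertionError: expected total 42 but got 40", "product_bug"),
    ("AssertionError: expected status 200 but got 500", "product_bug"),
    ("Connection refused: device emulator-5554 offline", "environment"),
    ("adb: device offline, connection reset", "environment"),
]


def classify(message: str, training: List[Tuple[str, str]] = TRAINING) -> Optional[str]:
    """1-nearest-neighbor: return the label of the most similar labeled example."""
    features = tokenize(message)
    best_label, best_score = None, -1.0
    for text, label in training:
        score = cosine(features, tokenize(text))
        if score > best_score:  # strict '>' keeps the first example on ties
            best_label, best_score = label, score
    return best_label


if __name__ == "__main__":
    print(classify("AssertionError: expected 3 items but got 2"))
```

A new failure simply inherits the label of its most similar labeled example, so what the program "knows" comes entirely from the labeled data rather than from hand-written rules.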

How can ML solve some of the challenges of software testing?

AI can help in several ways: reducing or eliminating the need to write automation scripts, creating self-healing scripts that adapt to changes in the app, increasing test coverage and, ultimately, delivering better quality. Here are some concrete ways AI can address these problems.

- No-code automation: The challenge of automation is the time, effort and skilled labor required to write automation scripts. What if we could generate automation scripts based on user intent? Better yet, what if we could use ML techniques to solve some of the persistent problems of automation, such as the lack of resiliency of scripts to dynamic content, changes in the UI, and variations in device form factors? Such self-healing scripts adapt to changes in the app UI.

- Automatic test case generation is another area where ML can help. Using supervised and unsupervised learning, ML algorithms can identify coverage gaps and generate new test cases to fill them.

- Visual quality testing is another area where ML algorithms can identify issues that otherwise cannot be caught by automation, such as UI accessibility issues, UI consistency across devices with different form factors, and conformance to UX design standards such as Google Material Design.

- Root cause analysis is also an area where ML algorithms can help, since they can synthesize vast repositories of data to pinpoint common errors based on symptoms and coding practices.
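The self-healing idea can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not how any particular product works: UI elements are modeled as a hypothetical `Element` snapshot rather than a real driver API, and a fixed, weighted attribute-matching heuristic (with made-up `WEIGHTS` and `threshold` values) stands in for the learned model an ML-based tool might use. When the recorded `id` no longer exists on the page, the locator falls back to the most similar element, provided its score clears the threshold.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple


@dataclass
class Element:
    """Hypothetical snapshot of a UI element's attributes (not a real driver API)."""
    tag: str
    attrs: Dict[str, str] = field(default_factory=dict)
    text: str = ""


# Hypothetical weights: how much each matching attribute contributes to similarity.
WEIGHTS = {"id": 3.0, "name": 2.0, "text": 2.0, "class": 1.0, "tag": 1.0}


def similarity(recorded: Element, candidate: Element) -> float:
    """Weighted share of the recorded element's attributes that the candidate matches."""
    pairs = [("tag", recorded.tag, candidate.tag), ("text", recorded.text, candidate.text)]
    pairs += [(k, recorded.attrs.get(k), candidate.attrs.get(k)) for k in ("id", "name", "class")]
    matched = possible = 0.0
    for key, want, got in pairs:
        if not want:  # attribute absent from the recording: ignore it
            continue
        possible += WEIGHTS[key]
        if want == got:
            matched += WEIGHTS[key]
    return matched / possible if possible else 0.0


def find_element(recorded: Element, page: List[Element],
                 threshold: float = 0.5) -> Tuple[Optional[Element], bool]:
    """Return (element, healed). Try the exact id first, then the most similar element."""
    rid = recorded.attrs.get("id")
    for element in page:
        if rid is not None and element.attrs.get("id") == rid:
            return element, False  # original locator still works
    if not page:
        return None, False
    best = max(page, key=lambda e: similarity(recorded, e))
    if similarity(recorded, best) >= threshold:
        return best, True  # healed: the script should update its stored locator
    return None, False


if __name__ == "__main__":
    login = Element("button", {"id": "login-btn", "class": "primary"}, "Log in")
    # The developers renamed the button's id; text and class are unchanged.
    page = [Element("button", {"id": "cancel", "class": "secondary"}, "Cancel"),
            Element("button", {"id": "signin-btn", "class": "primary"}, "Log in")]
    found, healed = find_element(login, page)
    print(found.attrs["id"], healed)
```

In practice, the weights and threshold could themselves be learned from the history of locator repairs, and a healed match would be written back to the script so that the next run uses the new locator directly.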